Call the Doctor - HDF(5) Clinic

Table of Contents

- Clinic 2022-11-29

- Clinic 2022-10-04

- Clinic 2022-09-27

- Clinic 2022-08-30

- Clinic 2022-08-23

- Clinic 2022-08-16

- Clinic 2022-08-09

- Clinic 2022-08-02

- Clinic 2022-07-26

- Clinic 2022-07-19

- Clinic 2022-07-12

- Clinic 2022-07-05

- Clinic 2022-06-27

- Clinic 2022-06-21

- Clinic 2022-06-14

- Clinic 2022-06-07

- Clinic 2022-05-24

- Clinic 2022-05-17

- Clinic 2022-05-10

- Clinic 2022-04-26

- Clinic 2022-04-19

- Clinic 2022-04-12

- Clinic 2022-04-05

- Clinic 2022-03-29

- Clinic 2022-03-22

- Clinic 2022-03-15

- Clinic 2022-03-08

- Clinic 2022-03-01

- Clinic 2022-02-22

- Clinic 2022-02-15

- Clinic 2022-02-08

- Clinic 2022-02-01

- Clinic 2022-01-25

- Clinic 2022-01-18

- Clinic 2022-01-11

- Clinic 2022-01-04

- Clinic 2021-12-21

- Clinic 2021-12-07

- Clinic 2021-11-23

- Clinic 2021-11-16

- Clinic 2021-11-09

- Clinic 2021-11-02

- Clinic 2021-10-28

- Clinic 2021-10-19

- Clinic 2021-09-28

- Clinic 2021-09-21

- Clinic 2021-08-31

- Clinic 2021-08-24

- Clinic 2021-08-17

- Clinic 2021-08-10

- Clinic 2021-08-03

- Clinic 2021-07-27

- Clinic 2021-07-20

- Clinic 2021-07-13

- Clinic 2021-07-06

- Clinic 2021-06-29

- Clinic 2021-06-22

- Clinic 2021-06-15

- Clinic 2021-06-08

- Clinic 2021-06-01

- Clinic 2021-05-25

- Clinic 2021-05-18

- Clinic 2021-05-11

- Clinic 2021-05-04

- Clinic 2021-04-27

- Clinic 2021-04-20

- Clinic 2021-04-06

- Clinic 2021-03-30

- Clinic 2021-03-23

- Clinic 2021-03-16

- Clinic 2021-03-09

- Clinic 2021-03-02

- Clinic 2021-02-23

- Clinic 2021-02-09

- Goal(s)

- This is a meeting dedicated to your questions.

- In the unlikely event there aren't any

- Sometimes life deals you an HDF5 file

- Meeting Etiquette

- Be social, turn on your camera (if you've got one)

- Raise your hand to signal a contribution (question, comment)

- Be mindful of your "airtime"

- Introduce yourself

- Use the shared Google doc for questions and code snippets

- When the 30 min. timer runs out, this meeting is over.

- Notes

- Don't miss our next webinar about data virtualization with HDF5-UDF and how it can streamline your work

- Bug-of-the-Week Award (my candidate)

- Documentation update

- Clinic 2021-02-16

Clinic 2022-11-29

Your questions

- Q

- ???

Types in the HDF5 Data Model

Reference: C.J. Date: Foundation for Object / Relational Databases: The Third Manifesto, 1998.

- Data Model

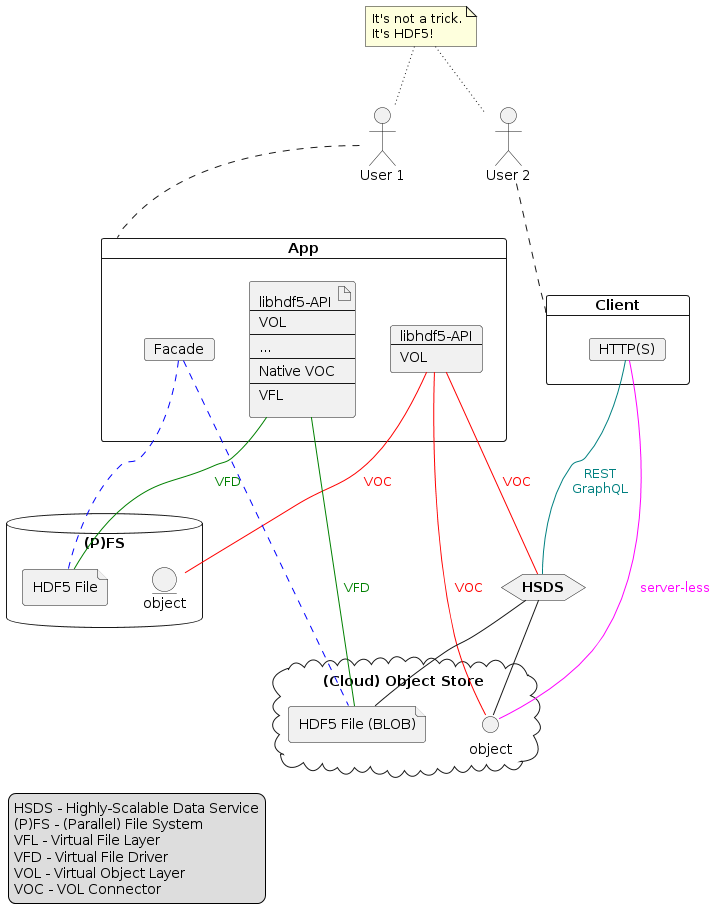

In the realm of HDF5, there are usually two data models in play.

- HDF5 Data Model

A data model is an abstract, self-contained, logical definition of the data structures, data operators, etc., that together make up the abstract machine with which users interact.

Metaphor: A data model in this sense is like a programming language, whose constructs can be used to solve many specific problems but in and of themselves have no direct connection with any such specific problem.

This is the sense in which I will be using the term 'data model.' I'm talking about HDF5 files, groups, datasets, attributes, transfers, etc.

An implementation of a given data model is a physical realization on a real machine of the components of the abstract machine that together constitute that model. There are currently two implementations of the HDF5 data model:

- HDF5 library + file format

- Highly Scalable Data Service (HSDS)

- Domain-specific Data Model

A data model is a model of the data (especially the persistent data) of some particular domain or enterprise.

Metaphor: A data model in this sense is like a specific program written in that language — it uses the facilities provided by the model, in the first sense of that term, to solve some specific problem.

We sometimes call this an HDF5 profile, the mapping of domain concepts to HDF5 data model primitives.

- HDF5 Data Model

- Types

In essence, a type is a set of (at least two) values — all possible values of some specific kind: for example, all possible integers, or all possible character strings, or all possible XML documents, or all possible relations with a certain heading (and so on).

(Some people require types to be named and finite.)

To define a type, we must:

- Specify the values that make up that type.

- Specify the hidden physical representation for values of that type. (This is an implementation issue, not a data model issue.)

- Specify a selector operator for selecting, or specifying, values of that type.

- Specify admissible type conversions and renderings.

- …

- Atomicity

The notion of atomicity has no absolute meaning; it just depends on what we want to do with the data. Sometimes we want to deal with an entire set of part numbers as a single thing, sometimes we want to deal with individual part numbers within that set—but then we’re descending to a lower level of detail, or lower level of abstraction.

- Scalar vs. Nonscalar Types

Loosely, a type is scalar if it has no user visible components and nonscalar otherwise. As atomicity, this has no absolute meaning. Some people treat 'scalar' and 'atomic' as synonymous.

- Values vs. Variables

Variables hold values. They have a location in space and time. Values "transcend" space and time.

- Questions

- What is the type of an HDF5 dataset?

- Is it a variable or a value?

- Is it scalar/nonscalar/atomic?

- What is an HDF5 datatype?

- What is the type of an HDF5 attribute?

- (How) Is an HDF5 user-defined function a dataset? (Extra credit!)

- Questions

- HDF5 Datatypes

The primary function of HDF5 datatypes is to describe the element type and physical layout of datasets, attributes, and maps. Documentation is a secondary function.

HDF5 supports a set of customizable basic types and a mechanism, datatype derivation, to compose new datatypes from existing ones. Any datatype derivation is rooted in atomic datatypes.

What's wrong with this figure? Nothing, but it's important to understand the context.

- What does 'Atomic' mean? (Not derived by composition. Composite = non-atomic = molecular)

- Why is an

Enumerationnot 'Atomic'? (Derives from a integer datatype.) - Why are

ArrayandVariable Lengthnot 'Atomic'? (Ditto. Derive from other datatype instances)

'Atomic' is an overloaded term in this context.

- Attribute values are treated as atomic under transfer by the HDF5 library

- Dataset values are not atomic because of partial I/O

- Selections

- Fields (for compounds)

- Dataset element values are atomic, except, records (values of compound)

Clinic 2022-10-04

Your questions

- Q

- ???

Last week's highlights

- Announcements

- Neil Fortner is our new Chief HDF5 Software Architect

- Happy days!

- Jobs @ The HDF Group

- Webinar Subfiling and Multiple dataset APIs: An introduction to two new features in HDF5 version 1.14

- Recording

- Slides: HDF5 Subfiling VFD & Multiple Dataset APIs

- Recent documentation updates

- Getting Started

- User Guide

- Reference Manual w/ language bindings (C, C++, Fortran, Java)

- Far from perfect but a big step forward

- Neil Fortner is our new Chief HDF5 Software Architect

- Forum

- C++ Thread unable to open file

- If you want threat-safety, use the C-API with a threat-safe build of the HDF5 library!

- Other language bindings (C++, Java, Fortran) and the high-level libs. are not thread-safe

- Performance improvements for many small datasets

- GraphQL is a bigger task

John provided an Python asynchronous I/O example

import asyncio import time async def say_after(delay, what): await asyncio.sleep(delay) print(what) async def main(): task1 = asyncio.create_task( say_after(2, 'hello')) task2 = asyncio.create_task( say_after(1, 'world')) print(f"started at {time.strftime('%X')}") # Wait until both tasks are completed (should take # around 2 seconds.) await task1 await task2 print(f"finished at {time.strftime('%X')}") asyncio.run(main())

started at 12:05:21 world hello finished at 12:05:23

- Noticeable speedup but not a panacea

Eventually I hope to have a version of

h5pydthat supportsasync(or maybe an entirely new package), that would make it a little easier to use. - Variable-Length Data in HDF5 Sketch RFC Status?

- Compound data type with zero-sized dimension

HDF5 array datatype field w/ a degenerate dimension

HDF5 "test.h5" { GROUP "/" { DATASET "dset" { DATATYPE H5T_COMPOUND { H5T_ARRAY { [2][2] H5T_IEEE_F32LE } "arr1"; H5T_ARRAY { [2][0] H5T_IEEE_F32LE } "arr2"; } DATASPACE SCALAR } } }- Use case?

- Metadata and Structure conventions

- ???

- C++ Thread unable to open file

- Engineering Corner

- Featuring: Dana Robinson

- What's new?

Starry HDF clinic line-up going forward

- 1st Tuesday of the month: Dana Robinson (HDF Engineering, Community)

- 2nd Tuesday: John Readey (HSDS, Cloud) – starting Oct 11

- 3rd Tuesday: Aleksandar Jelenak (Pythonic Science, Earth Science)

- 4th Tuesday: Scot Breitenfeld (HPC, Research)

- (In the unlikely event that there is a) 5th Tuesday: Gerd Heber + surprise guest

Clinic 2022-09-27

Your questions

- Q

- ???

Last week's highlights

- Announcements

- Thanks to Scot Breitenfeld, Aleksandar Jelenak, and John Readey for stepping up!

- Thanks to Lori the recordings are available on YouTube.

- Jobs @ The HDF Group

- Thanks to Scot Breitenfeld, Aleksandar Jelenak, and John Readey for stepping up!

- Forum

- Type confusion for chunk sizes

- Size matters

;-) - Use of

sizein APIs assize_t(C-type) orhsize_t(HDF5 API type) - What the

sizeunit?- Element count ->

hsize_t - Storage bytes ->

size_t

- Element count ->

- And we still managed to make a holy mess of it…

- Size matters

- Zstandard plugin for HDF5 does not allow negative compression levels

- Community life

- Store a group in a contiguous file space

Can one store all datasets in a group into a contiguous file space? I have an application that reads groups as units and all the datasets use the contiguous data layout. I believe this option, if available, can yield a good read performance.

- Interesting suggestions

- Elena suggested to first create all datasets with

H5D_ALLOC_TIME_LATE - Mark suggested to

H5Pget_meta_block_sizeto set aside sufficient "group space" (Maybe alsoH5Pset_est_link_info?)

- Elena suggested to first create all datasets with

- Interesting suggestions

- MSC Nastran *.H5

- HDFView "in distress" - it's not a substitute for a domain-specific viz tool

- What do people think of HDFView?

- Make HDFView available on Flathub.org

The app. has been accepted and is now available on flathub.org as org.hdfgroup.HDFView! That is HDFView 3.2.0 with HDF5 + HDF4 support for x86-64 & aarch64 CPUs.

- Let's try it!

- Performance improvements for many small datasets

- We just talked about multi-dataset I/O

- REST round-tripping

- GraphQL?

- pHDF,

H5Fflushand compression

- (Seemingly) Odd behavior of

H5Fflushin parallel with vs. w/o compression.

- (Seemingly) Odd behavior of

- Type confusion for chunk sizes

- Engineering Corner

- Featuring: Dana Robinson

- Is Dana back from his from trip?

Tips, tricks, & insights

Nada. (Just catching up on the forum…)

Clinic 2022-08-30

Your questions

- Q

- ???

Last week's highlights

- Announcements

- Webinar Vscode-h5web: A VSCode extension to explore and visualize HDF5 files

- August 31 (tomorrow) at 9:00 AM (CDT)

- Free registration

- Jobs @ The HDF Group

- New RFC

- Terminal VOL Connector Feature Flags

- How do I know if a VOL connector implements everything I need for my application?

- Webinar Vscode-h5web: A VSCode extension to explore and visualize HDF5 files

- Forum

Nothing stood out.

- Engineering Corner

- Featuring: Dana Robinson

- What do you want to see in “HDF5 2.0”?

- What else is new?

Tips, tricks, & insights

- Where is the "official" HDF5 standard?

- My colleague Aleksandar Jelenak just pointed me to HDF5 Data Model, File

Format and Library—HDF5 1.6

- An uncluttered and clear presentation

- Needs to be updated (e.g., VOL, HSDS) and revised, but still accurate

- The HDF Group maintains this HDF5 documentation

- My colleague Aleksandar Jelenak just pointed me to HDF5 Data Model, File

Format and Library—HDF5 1.6

- Proposed HDF Clinic format change

- Four themed events per month:

- Ecosystem (Aleksandar Jelenak)

- HDF5 & Python, R, Julia, other formats & frameworks, etc.

- HSDS (John Readey)

- HDF5 & Cloud, Kubernetes, server-less, etc.

- HPC (Scot Breitenfeld)

- Parallel I/O, file systems, DAOS, diagnostics, troubleshooting, etc.

- Engineering (Dana Robinson)

- Everything about getting involved and contributing to HDF5, PRs, issues, releases, new features, etc.

- We might have to adjust the weekday/time

- Thoughts?

- Four themed events per month:

Clinic 2022-08-23

Your questions

- Q

- ???

Last week's highlights

- Announcements

- Webinar Vscode-h5web: A VSCode extension to explore and visualize HDF5 files

- Aug 31, 2022 04:00 PM (Central) <– Really?

- Free Registration

- Jobs @ The HDF Group

- New blog post by Mr. HSDS (John Readey)

- HSDS Streaming

- Overcoming the

max_request_sizelimit - New performance numbers HSDS vs. HDF5 lib. and Mac Pro vs. HP Dev One

- Webinar Vscode-h5web: A VSCode extension to explore and visualize HDF5 files

- Forum

- Allocating chunks in a specific order?

- Use case: Zarr shards

- No API currently

- Potential issues:

- Relying on implementation details

- Undesirable constraints on library and tool behavior

- Performance impact

- Machine-readable units format?

- Blast from the past

- (Physical) Units are part of the datatype \(1.0 \mathrm{[m]} \neq 1.0 \mathrm{[s]}\)

- User-defined datatypes extend to user-defined units

- Datatype conversion pipeline extends to unit conversion pipeline

- Maybe time for another proposal attempt?

- C++ Read Compound dataset without taking static structure for different fieldnames

I have multiple H5 files and i am creating a generic code where i need to read specific field defined in input whether it will be an

arrayordoubleorint.- Great topic for tips and tricks…

- Allocating chunks in a specific order?

- Engineering Corner

- Featuring: Dana Robinson

- What do you want to see in “HDF5 2.0”?

- What else is new?

Tips, tricks, & insights

- HDF5 compound datatype introspection

- What's known at compile time?

- Field name(s) + in-memory type:

constexprortemplate - Field name(s) only: dataype introspection

- Field name(s) + in-memory type:

- Datatype introspection "algorithm":

- Retrieve the field in-file datatype via

H5Tget_member_indexandH5Tget_member_class/H5Tget_member_type - Retrieve the in-memory (or native) datatype via

H5Tget_native_type - Determine the size via

H5Tget_size - Construct an in-memory compound datatype via

H5Tcreate/H5Tinsert - Allocate a buffer of the right size

H5Dread& parse the buffer

- Retrieve the field in-file datatype via

- What's known at compile time?

Clinic 2022-08-16

Your questions

- Q

- ???

Last week's highlights

- Announcements

- HDF5 1.13.2 is out. Get it here!

- Highlights: Onion VFD, Subfiling VFD

- Dana?

- HDF5 1.13.2 is out. Get it here!

- Forum

- Collective VDS creation

We have data scattered across thousands of processes. But each process does not own an hyperslab of the data. It actually owns a somewhat random collection of pieces of data, which are all hyperslabs. To write this data to a file collectively, I first tried to make each process select a combination of hyperslabs using

H5Scombine_hyperslab.… Now I am trying to reproduce this behaviour using VDS: all pieces are written in a dataset in disorder. Another dataset (virtual this time) maps all pieces in the right order. It works when using 1 process!

The problem: I am unable to create this VDS in a collective way.

… -> is it possible to create a VDS collectively ?

H5Scombine_hyperslabis for point selections onlyNeil's answer RE: VDS

For the VDS question: to create a VDS collectively every process needs to add every mapping. This conforms with the way collective metadata writes in HDF5 generally work - every process is assumed to make exactly the same (metadata write) calls with exactly the same parameters. It would be possible to implement what you are describing as a high level routine which would call H5Pgetvirtual then do an allgather on the VDS mappings, but for now it’s easiest to handle it in the user application.

It’s also worth noting that there are some other limitations to parallel VDS I/O:

printfstyle mappings are not supported- The VDS must be opened collectively

- When using separate source file(s), the source file(s) cannot be opened by the library while the VDS is open.

- When using separate source file(s), data cannot be written through the VDS unless the mapping is equivalent to 1 process per source file

- All I/O is independent internally (possible performance penalty)

- Each rank does an independent open of each source file it accesses (possible performance penalty)

I should also note that VDS is not currently tested in the parallel regression test suite so there may be other issues.

- 9BN rows/sec + HDF5 support for all python datatypes

- Allocating chunks in a specific order?

- Q

- Is there a way to allocate (not necessarily write) unfiltered chunks in a specific order?

- A

- Mimic w/

H5Pset_external. Then what?

- Collective VDS creation

- Engineering Corner

- Featuring: Dana Robinson

- What do you want to see in “HDF5 2.0”?

- What else is new?

Tips, tricks, & ruminations

- Dealing w/ concurrency "in" HDF5: ZeroMQ

from datetime import datetime import h5py from time import sleep import zmq context = zmq.Context() socket = context.socket(zmq.SUB) socket.connect("tcp://localhost:5555") socket.setsockopt(zmq.SUBSCRIBE, b'camera_frame') sleep(2) with h5py.File('camera_data.hdf5', 'a') as file: now = str(datetime.now()) g = file.create_group(now) topic = socket.recv_string() frame = socket.recv_pyobj() x = frame.shape[0] y = frame.shape[1] z = frame.shape[2] dset = g.create_dataset('images', (x, y, z, 1), maxshape=(x, y, z, None)) dset[:, :, :, 0] = frame i=0 while True: i += 1 topic = socket.recv_string() frame = socket.recv_pyobj() dset.resize((x, y, z, i+1)) dset[:, :, :, i] = frame file.flush() print('Received frame number {}'.format(i)) if i == 50: break

- The HDF5 library as an application extension acts like a wall for threads

- Before that wall becomes more permeable, maybe client/server is the better approach?

- Let's have a forum discussion around a suitable HDF5 ZeroMQ protocol!

Clinic 2022-08-09

Your questions

- Q

- ???

Last week's highlights

- Announcements

- Webinar recording available now: The Hermes Buffer Organizer

- Cloud Storage Options for HDF5 - a new blog post by Mr. HSDS (John Readey)

- Upcoming HDF5 Training Workshop for intermediate and advanced users with

Scot Breitenfeld (The HDF Group)

- (Free) Registration deadline: Monday, August 15 2022

- Event date/time: Aug 31, 2022, 10:00 AM - 3:00 PM (America/Chicago)

- Note: The participation of foreign nationals is subject to certain restrictions. Check the website for details!

- Forum

- Backporting

H5Dchunk_iterto 1.12 and 1.10?

- Great contribution by Mark Kittisopikul

- Let's do more of this!

- Using HDF5 for file backed Array

Sample

// Describe the dimensions. This example would mimic a 3D regular grid // of size 10 x 20 x 30 with another 2D array of 60 x 80 at each grid point. std::array<size_t, 3> tupleDimensions = {10, 20, 30}; std::array<size_t, 2> componentDimensions = {60, 80}; // Instantiate the DataArray class as an array of floats using the file // /tmp/foo.hdf5" as the hdf5 file and “/data” as the internal hdf5 path // to the actual data DataArray data("/tmp/foo.hdf5", "/data", tupleDimensions, componentDimensions); // Now lets loop over the data for(size_t z = 0; z < 10; z++) for(size_t y = 0; y < 20; y++) for(size_t x = 0; x < 30; x++) { size_t index = // compute proper index to the tuple for(size_t pixel = 0; pixel < 80 * 60; pixel++) { data[index + pixel] = 0; } }

- Responses from Steven (Mr. H5CPP) and Rick (Mr. HDFql)

Sliding cursors are coming to HDFql

int count; HDFql::execute("SELECT FROM dset INTO SLIDING(5) CURSOR"); count = 0; // whenever cursor goes beyond last element, HDFql automatically retrieves // a new slice/subset thanks to an implicit hyperslab // (with start=[0,5,10,...], stride=5, count=1 and block=5) while(HDFql::cursorNext() == HDFql::Success) { if (*HDFql::cursorGetDouble() < 20) { count++; } } std::cout << "Count: " << count << std::endl;

H5P_set_filtercd_valuesParameter

- Start w/ the filter registry

- Filter maintainers are responsible, ultimately

- Backporting

- Engineering Corner

- Featuring: Dana Robinson

- What do you want to see in “HDF5 2.0”?

- What else is new?

Tips, tricks, & insights

- Datatype conversion - reloaded

- Q

- What if source and destination datatype are of different size?

- A

- We use a conversion buffer!

#include "hdf5.h" #include <assert.h> #include <stdint.h> #include <stdio.h> #include <stdlib.h> // our conversion function // HACK: in a production version, you would inspect SRC_ID and DST_ID, etc. herr_t B32toU64(hid_t src_id, hid_t dst_id, H5T_cdata_t *cdata, size_t nelmts, size_t buf_stride, size_t bkg_stride, void *buf, void *bkg, hid_t dxpl) { herr_t retval = EXIT_SUCCESS; switch (cdata->command) { case H5T_CONV_INIT: printf("Initializing conversion function...\n"); // do non-trivial initialization here break; case H5T_CONV_CONV: printf("Converting...\n"); uint32_t* p32 = (uint32_t*) buf; uint64_t* p64 = (uint64_t*) buf; // the conversion happens in-place // since we don't want to overwrite elements, we need to // shift/convert starting with the last element and work our // way to the beginning for (size_t i = nelmts; i > 0; --i) { p64[i-1] = (uint64_t) p32[i-1]; } break; case H5T_CONV_FREE: printf("Finalizing conversion function...\n"); // do non-trivial finalization here break; default: break; } return retval; } int main() { int retval = EXIT_SUCCESS; // in-memory bitfield datatype hid_t btfd = H5T_NATIVE_B32; // in-file unsigned integer datatype hid_t uint = H5T_STD_U64LE; // register our conversion function w/ the HDF5 library assert(H5Tregister(H5T_PERS_SOFT, "B32->U64", btfd, uint, &B32toU64) >= 0); // sample data uint32_t buf[32]; for (size_t i = 0; i < 32; ++i) { buf[i] = 1 << i; printf("%u\n", buf[i]); } hid_t file = H5Fcreate("foo.h5", H5F_ACC_TRUNC, H5P_DEFAULT, H5P_DEFAULT); hid_t fspace = H5Screate_simple(1, (hsize_t[]) {32}, NULL); hid_t dset = H5Dcreate(file, "integers", uint, fspace, H5P_DEFAULT, H5P_DEFAULT, H5P_DEFAULT); hid_t dxpl = H5Pcreate(H5P_DATASET_XFER); // we supply a conversion buffer where can convert up to 6 elements // at a time (48 = 6 x 8) unsigned char tconv[48]; H5Pset_buffer(dxpl, 48, tconv, NULL); // alternative: the HDF5 library will dynamically allocate conversion // and background buffers if we pass NULL for the buffers // H5Pset_buffer(dxpl, 48, NULL, NULL); // the datatype conversion function will be invoked as part of H5Dwrite H5Dwrite(dset, btfd, H5S_ALL, H5S_ALL, dxpl, (void*) buf); H5Pclose(dxpl); H5Dclose(dset); H5Sclose(fspace); H5Fclose(file); // housekeeping assert(H5Tunregister(H5T_PERS_SOFT, "B32->U64", btfd, uint, &B32toU64) >= 0); return retval; }

Clinic 2022-08-02

Your questions

- Q

On the tar to h5 converter: Does the (standard) compactor simply skip the >64K tar file entries if ones are encountered (w/ log or error message), or must they all conform to 64K limit? Also, what was the typical range on the number of files extracted/converted? –Robert

- A

- Currently, the code will skip entries >64K, because the underlying

H5Dcreatewill fail. (The logic and code can be much improved.) It's better to first runarchive_checker_64kand see if there are any size warnings. Theh5compactorandh5shredderwere successfully run on TAR archives with tens of millions of small images (<64K).

Last week's highlights

- Announcements

- Webinar recording "An Introduction to HDF5 for HPC Data Models, Analysis, and Performance" posted.

- Webinar Announcement: The Hermes Buffer Organizer

- Friday, August 5, 2022 at 11:00 a.m. Central Time

- Registration

- Webinar recording "An Introduction to HDF5 for HPC Data Models, Analysis, and Performance" posted.

- Forum

- Variable length list of strings

- What's a string?

- What the user meant: array of characters

- Ergo: list of variable-length strings

""= list of arrays of characters of varying length - This is not the same as an HDF5 dataset of variable-length strings

Aleksandar's example:

import h5py import numpy as np import string vlength = [3, 8, 6, 4] dt = h5py.vlen_dtype(np.dtype('S1')) with h5py.File('char-ragged-array.h5', 'w') as f: dset = f.create_dataset('ragged_char_array', shape=(len(vlength),), dtype=dt) for _ in range(len(vlength)): dset[_] = np.random.choice(list(string.ascii_lowercase), size=vlength[_]).astype('S1')

Sample output:

HDF5 "char-ragged-array.h5" { GROUP "/" { DATASET "ragged_char_array" { DATATYPE H5T_VLEN { H5T_STRING { STRSIZE 1; STRPAD H5T_STR_NULLPAD; CSET H5T_CSET_ASCII; CTYPE H5T_C_S1; }} DATASPACE SIMPLE { ( 4 ) / ( 4 ) } DATA { (0): ("g", "b", "x"), ("k", "j", "u", "u", "i", "p", "a", "t"), (2): ("t", "i", "b", "x", "u", "y"), ("q", "i", "r", "h") } } } }

- What's a string?

- Variable length list of strings

- Engineering Corner

- Featuring: Dana Robinson

- What do you want to see in “HDF5 2.0”?

- Releases on track?

Tips, tricks, & insights

- 9BN rows/sec + HDF5 support for all python datatypes

- Forum post

- Tablite GitHub repo

- Tutorial

Where is HDF5?

from tablite.config import H5_STORAGE H5_STORAGE

PosixPath('/tmp/tablite.hdf5')Simple schema: tables -> columns -> pages (via attributes)

/ Group /column Group /column/1 Dataset {NULL} /column/16 Dataset {NULL} ... /page Group /page/1 Dataset {3/Inf} /page/2 Dataset {3/Inf} ... /table Group /table/1 Dataset {NULL} /table/3 Dataset {NULL} ...

Clinic 2022-07-26

Corrections

- The VSCode extension for HDF5 works with NetCDF-4 files

- I grabbed a netCDF-3 file. Duh!

Your questions

- Q

- ???

Last week's highlights

- Announcements

- Webinar Announcement: An Introduction to HDF5 for HPC Data Models, Analysis, and Performance

- Presented by The HDF Group's one and only Scot Breitenfeld

- Final boarding call: Register here!

- VFD SWMR beta 2 release

- Testers wanted

- New blog post HSDS Docker Images by Mr. HSDS (John Readey)

- America runs on Dunkin, HSDS runs on Docker…

- DockerHub, container registries, Kubernetes,etc.

- Webinar Announcement: An Introduction to HDF5 for HPC Data Models, Analysis, and Performance

- Forum

- Looking for an hdf5 version compatible with go1.9.2

- Go HDF5!

;-)- Old software…

- Go HDF5!

- Looking for an hdf5 version compatible with go1.9.2

- Engineering Corner

- Featuring: Dana Robinson

- What do you want to see in “HDF5 2.0”?

- Evolution not revolution

- "Lessons learned" release

Tips, tricks, & insights

- Little HDF5 helpers for ML

- Use case: Tons of tiny image, audio, or video files

- Poor ML training I/O performance, especially against PFS

- Can't read w/o un-

tar-ing (uncompressing) - No parallel I/O

tar2h5- convert Tape ARchives to HDF5 files- Different optimizations

- Size optimization (compact datasets)

- Duplicate removal (SHA-1 checksum)

- Compression

- Different optimizations

- Use case: Tons of tiny image, audio, or video files

Clinic 2022-07-19

Your questions

- Q

- ???

Last week's highlights

- Announcements

- Webinar Announcement: An Introduction to HDF5 for HPC Data Models, Analysis, and Performance

- Presented by The HDF Group's one and only Scot Breitenfeld

- Register here!

- VFD SWMR beta 2 release

- Testers wanted

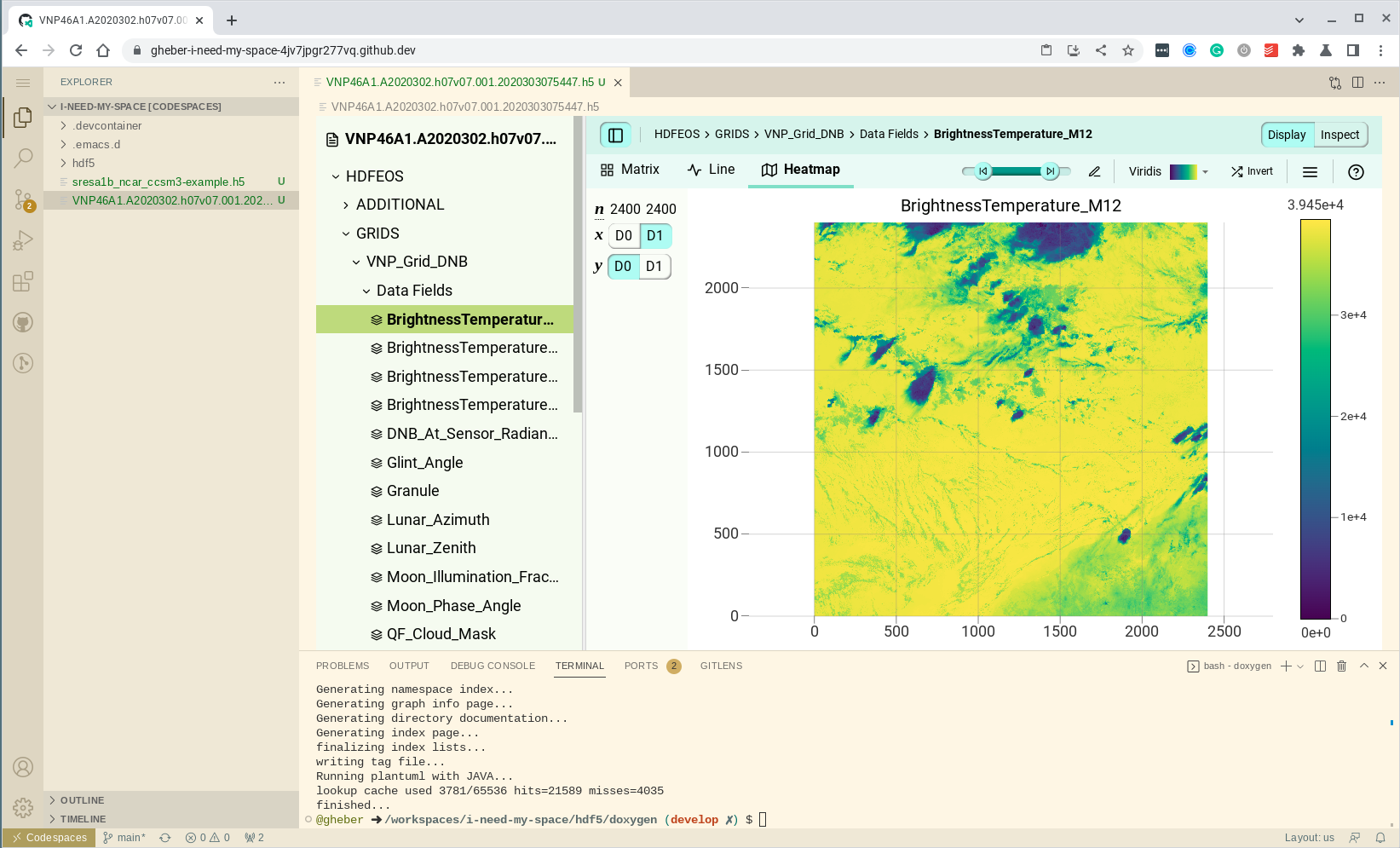

- VSCode extension for HDF5

- Brief demo later

- Webinar Announcement: An Introduction to HDF5 for HPC Data Models, Analysis, and Performance

- Forum

The week before last was quiet, but then it got hot…

ros3driver breaks randomly

- Not properly recovering from S3 read failures

- No retries

- On Python,

fsspecmight be the better option for the time being

- Not properly recovering from S3 read failures

- Memory mapping / Paging via HDF5 & VFD?

- Goes back to a HUG 2021 discussion

Maybe we don't need memory mapping API, but

What we really need is the ability to allocate space in the HDF5 file without writing. (@kittisopikulm)

- Interesting idea

- Possibility of backporting

H5Dchunk_iterto 1.12?

- 1.12 is nearing EOL

- 1.10 might be a better target

- HDFView on Windows not displaying fixed length UTF-8 string correctly

- Probably an HDFView bug

- Virtual datasets in HSDS?

- Not currently supported in HSDS, but…

- You can fake it via

H5D_CHUNKED_REF_INDIRECT

- You can fake it via

- Not currently supported in HSDS, but…

- implement hdf5 in eclipse cdt for a c tool and eclipse mingw 32 need help

- Are we failing developers?

Tips, tricks, & insights

- First look at the VSCode extension for HDF5

- If you don't have a local installation of VSCode, you can use GitHub Codespaces and run in a Web browser

- Or roll your own w/

code-serverand run in the browser

Clinic 2022-07-12

Your questions

- Q

- ???

Last week's highlights

- Announcements

- Deep Dive: HSDS Container Types

- New blog post by Mr. HSDS (John Readey)

- Webinar Announcement: An Introduction to HDF5 for HPC Data Models, Analysis, and Performance

- Presented by The HDF Group's one and only Scot Breitenfeld

- Register here!

- VFD SWMR beta 2 release

- Testers wanted

- Deep Dive: HSDS Container Types

- Forum

It was all quiet on the forum. What happened? Everybody must be happy, I guess…

Tips, tricks, & insights

- HDF5-style datatype conversion

- Use case: A lot of data coming from devices in physical experiments are

bitfields. To be useful for analysts, the data needs to be converted to

engineering types and units. Does that mean we always must store 2 or more

data copies?

- There is no intrinsic reason to have multiple copies, but there might be other reasons, e.g., performance, to maintain multiple copies.

- How do we pull this off? Filters? (No! Same datatype…)

- Datatype transformations!

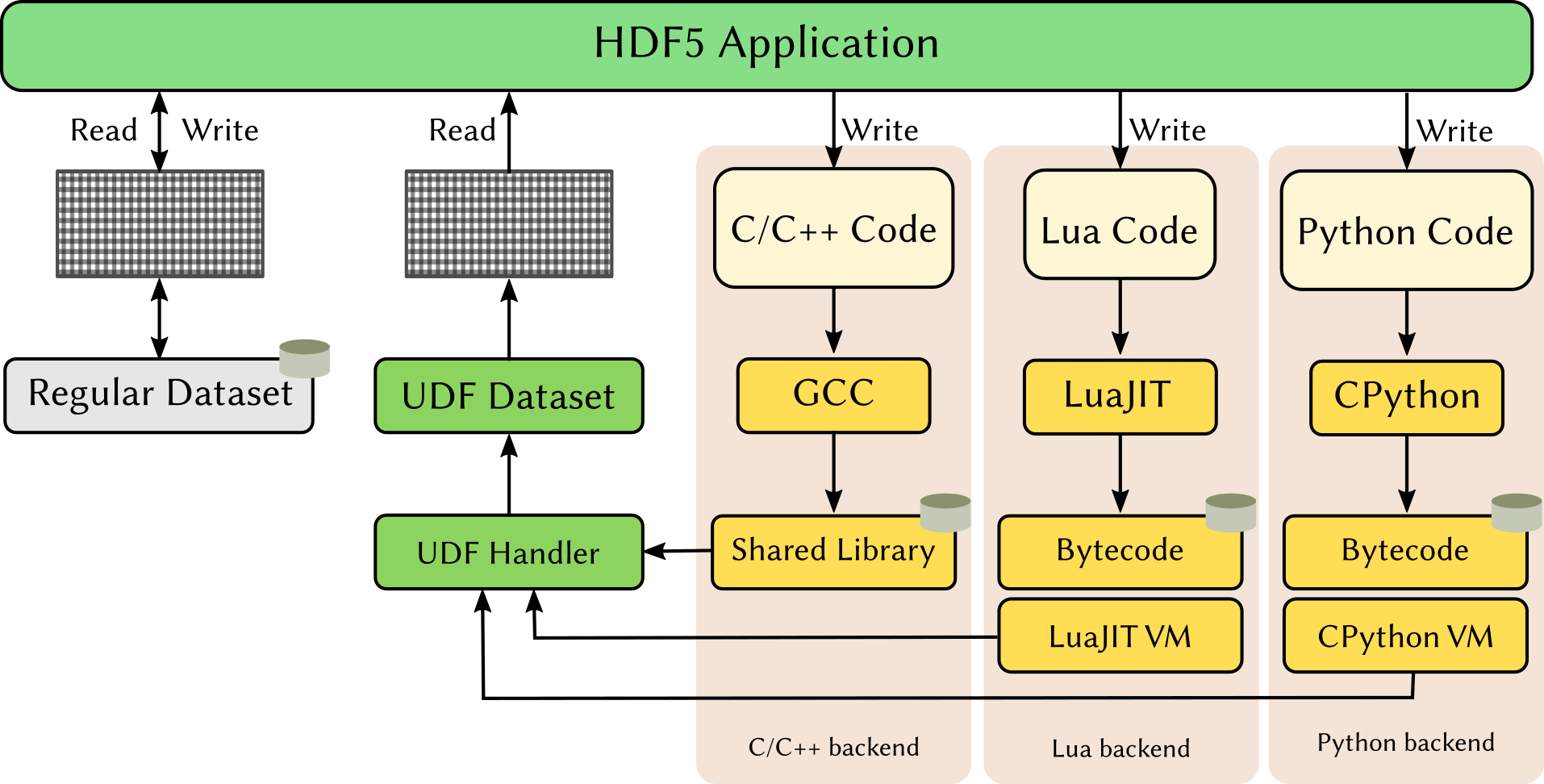

- Example: Use an in-memory bitfield representation and store as a compound w/ two fields

Code#include "hdf5.h" #include <assert.h> #include <stdint.h> #include <stdio.h> #include <stdlib.h> // our compound destination type in memory typedef struct { uint16_t low; uint16_t high; } low_high_t; // our conversion function // HACK: in a production version, you would inspect SRC_ID and DST_ID, etc. herr_t bits2cmpd(hid_t src_id, hid_t dst_id, H5T_cdata_t *cdata, size_t nelmts, size_t buf_stride, size_t bkg_stride, void *buf, void *bkg, hid_t dxpl) { herr_t retval = EXIT_SUCCESS; switch (cdata->command) { case H5T_CONV_INIT: printf("Initializing conversion function...\n"); // do non-trivial initialization here break; case H5T_CONV_CONV: printf("Converting...\n"); // the conversion function simply splits and swaps bitfield halves low_high_t* ptr = (low_high_t*) buf; for (size_t i = 0; i < nelmts; ++i) { uint16_t swap = ptr[i].low; ptr[i].low = ptr[i].high; ptr[i].high = swap; } break; case H5T_CONV_FREE: printf("Finalizing conversion function...\n"); // do non-trivial finalization here break; default: break; } return retval; } int main() { int retval = EXIT_SUCCESS; // in-memory bitfield datatype hid_t btfd = H5T_NATIVE_B32; // compound of two unsigned shorts hid_t cmpd = H5Tcreate(H5T_COMPOUND, 4); H5Tinsert(cmpd, "low", 0, H5T_NATIVE_USHORT); H5Tinsert(cmpd, "high", 2, H5T_NATIVE_USHORT); // register our conversion function w/ the HDF5 library assert(H5Tregister(H5T_PERS_SOFT, "bitfield->compound", btfd, cmpd, &bits2cmpd) >= 0); // notice that the conversion function is its own inverse assert(H5Tregister(H5T_PERS_SOFT, "compound->bitfield", cmpd, btfd, &bits2cmpd) >= 0); // sample data uint32_t buf[32]; for (size_t i = 0; i < 32; ++i) { buf[i] = 1 << i; printf("%ud\n", buf[i]); } // we could check the conversion in-memory, in-place //H5Tconvert(btfd, cmpd, 32, buf, NULL, H5P_DEFAULT); hid_t file = H5Fcreate("cmpd.h5", H5F_ACC_TRUNC, H5P_DEFAULT, H5P_DEFAULT); hid_t fspace = H5Screate_simple(1, (hsize_t[]) {32}, NULL); hid_t dset = H5Dcreate(file, "shorties", cmpd, fspace, H5P_DEFAULT, H5P_DEFAULT, H5P_DEFAULT); // the datatype conversion function will be invoked as part of H5Dwrite H5Dwrite(dset, btfd, H5S_ALL, H5S_ALL, H5P_DEFAULT, (void*) buf); // the datatype conversion function will be invoked as part of H5Dread H5Dread(dset, btfd, H5S_ALL, H5S_ALL, H5P_DEFAULT, (void*) buf); for (size_t i = 0; i < 32; ++i) printf("%ud\n", buf[i]); H5Dclose(dset); H5Sclose(fspace); H5Fclose(file); // housekeeping assert(H5Tunregister(H5T_PERS_SOFT, "compound->bitfield", cmpd, btfd, &bits2cmpd) >= 0); assert(H5Tunregister(H5T_PERS_SOFT, "bitfield->compound", btfd, cmpd, &bits2cmpd) >= 0); H5Tclose(cmpd); return retval; }

- Datatype conversions do what it says on the tin and sometimes are just what you need

- Don't confuse them with filters!

- With HDF5-UDF it is possible to implement datatype conversions via filters, but at the expense of additional datasets

- Use case: A lot of data coming from devices in physical experiments are

bitfields. To be useful for analysts, the data needs to be converted to

engineering types and units. Does that mean we always must store 2 or more

data copies?

Clinic 2022-07-05

Your questions

- Q

- ???

Last week's highlights

- Announcements

- VFD SWMR beta 2 release

- Testers wanted

- VFD SWMR beta 2 release

- Forum

- HDF5 1.10, 1.12 dramatic drop of performance vs 1.8

- Recap:

h5open_f/h5close_foverhead, chunk size 1 issue… User provided a nice table

chunk size data (bytes) metadata (bytes) 1 120000 1128952 50 120000 26456 2048 122880 3400 131072 524288 3400 - No compression

- Steven Varga suggested that

- The application might benefit from a dedicated I/O handler, and he provided a multithreaded queue example

- Data generationn can be decoupled from recording via ZeroMQ, and he provided an example with the generator (sender) in Fortran and the recorder (receiver) in C

- Recap:

- Issue w/ memory backed files

- We are able to reproduce the issue!

- Slowdown in

H5Oget_infobecause ofH5O_INFO_ALLoption - The difference between the in-file and in-memory image sizes can be explained by incremental (re-)allocation in the core VFD

The real issue is this:

HDF5-DIAG: Error detected in HDF5 (1.13.2-1) thread 0: #000: H5F.c line 837 in H5Fopen(): unable to synchronously open file major: File accessibility minor: Unable to open file #001: H5F.c line 797 in H5F__open_api_common(): unable to open file major: File accessibility minor: Unable to open file #002: H5VLcallback.c line 3686 in H5VL_file_open(): open failed major: Virtual Object Layer minor: Can't open object #003: H5VLcallback.c line 3498 in H5VL__file_open(): open failed major: Virtual Object Layer minor: Can't open object #004: H5VLnative_file.c line 128 in H5VL__native_file_open(): unable to open file major: File accessibility minor: Unable to open file #005: H5Fint.c line 1964 in H5F_open(): unable to read superblock major: File accessibility minor: Read failed #006: H5Fsuper.c line 450 in H5F__super_read(): unable to load superblock major: File accessibility minor: Unable to protect metadata #007: H5AC.c line 1396 in H5AC_protect(): H5C_protect() failed major: Object cache minor: Unable to protect metadata #008: H5C.c line 2368 in H5C_protect(): can't load entry major: Object cache minor: Unable to load metadata into cache #009: H5C.c line 7315 in H5C__load_entry(): incorrect metadata checksum after all read attempts major: Object cache minor: Read failed

- It appears that the superblock checksum (in versions 2, 3) is not correctly set or updated

- Maybe this is a corner case of the use of HDF5 file image w/ core VFD? Investigating…

- Authenticated AWS S3

- Use of the read-only S3 VFD w/ Python?

The ros3 driver is very slick. It works great for public h5 files.

I am trying to perform a read of an H5 file using ros3 that requires authentication credentials. I have been successful in using h5ls/h5dump in accessing an h5 file against an authenticated AWS S3 call using –vfd=ros3 and –s3-cred=(<region,<keyid>,<keysecret>).

However, I cannot get this to work with h5py.

- The user answered his on question,

h5pydoes support this VFD and AWS credentials as documented here - He also commented that this is not limited to AWS S3, but also works with other S3-compatible storage options

- And he's looking for HSDS on GCP (Google Cloud)

- HDF5 1.10, 1.12 dramatic drop of performance vs 1.8

Tips, tricks, & insights

- What others are doing with HDF5

- Subscribe for alerts on Google Scholar

- Alert query

hdf5- …

- Type

- Most relevant results

- You will get 2-4 emails a week with about 6-10 citations

- Recent highlights

- Fission Matrix Processing Using the MCNP6.3 HDF5 Restart File

- See the Wikipedia article for Monte Carlo N-Particle Transport Code

Towards A Practical Provenance Framework for Scientific Data on HPC Systems

So we derive an I/O-centric provenance model, which enriches the W3C PROV standard with a variety of concrete sub-classes to describe both the data and the associated I/O operations and execution environments precisely with extensibility.

Moreover, based on the unique provenance model, we are building a practical prototype which includes three major components: (1) Provenance Tracking for capturing diverse I/O operations; (2) Provenance Storage for persisting the captured provenance information as standard RDF triples; (3) User Engine for querying and visualizing provenance.

Stimulus: Accelerate Data Management for Scientific AI applications in HPC

… a lack of support for scientific data formats in AI frameworks. We need a cohesive mechanism to effectively integrate at scale complex scientific data formats such as HDF5, PnetCDF, ADIOS2, GNCF, and Silo into popular AI frameworks such as TensorFlow, PyTorch, and Caffe. To this end, we designed Stimulus, a data management library for ingesting scientific data effectively into the popular AI frameworks. We utilize the StimOps functions along with StimPack abstraction to enable the integration of scientific data formats with any AI framework. The evaluations show that Stimulus outperforms several large-scale applications with different use-cases such as Cosmic Tagger (consuming HDF5 dataset in PyTorch), Distributed FFN (consuming HDF5 dataset in TensorFlow), and CosmoFlow (converting HDF5 into TFRecord and then consuming that in TensorFlow) by 5.3x, 2.9x, and 1.9x respectively with ideal I/O scalability up to 768 GPUs on the Summit supercomputer. Through Stimulus, we can portably extend existing popular AI frameworks to cohesively support any complex scientific data format and efficiently scale the applications on large-scale supercomputers.

-

DIARITSup is a chain of various software following the concept of ”system of systems”. It interconnects hardware and software layers dedicated to in-situ monitoring of structures or critical components. It embeds data assimilation capabilities combined with specific Physical or Statistical models like inverse thermal and/or mechanical ones up to the predictive ones. It aims at extracting and providing key parameters of interest for decision making tools. Its framework natively integrates data collection from local sources but also from external systems. DIARITSup is a milestone in our roadmap for SHM Digital Twins research framework. Furthermore, it intends providing some useful information for maintenance operations not only for surveyed targets but also for deployed sensors.

Meanwhile, a recorder manage the recording of all data and metadata in the Hierarchical Data Format (HDF5). HDF5 is used to its full potential with its Single-Writer-Multiple-Readers feature that enables a graphical user interface to represent the saved data in real-time, or the live computation of SHM Digital Twins models for example. Furthermore, the flexibility of HDF5 data storage allows the recording of various type of sensors such as punctual sensors or full field ones.

- It's impossible to keep up, but always a great source of inspiration!

- Fission Matrix Processing Using the MCNP6.3 HDF5 Restart File

- Subscribe for alerts on Google Scholar

- Next time: datatype conversions

Clinic 2022-06-27

Your questions

- Q

- ???

Last week's highlights

- Announcements

- VFD SWMR beta 2 release

- Testers wanted

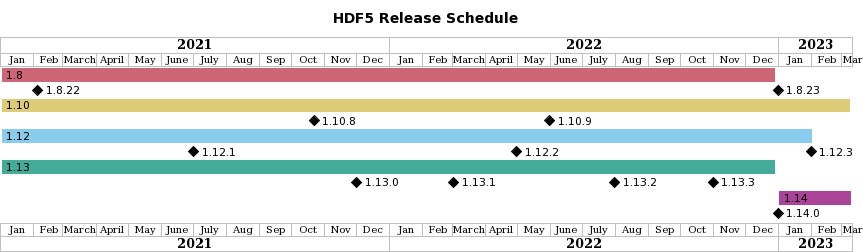

- 2022 HDF5 Release Schedule announced

- HDF5 1.8.x and 1.12.x are coming to an end (1.8.23, 1.12.3)

- HDF5 1.10.x and 1.14.x are here to stay for a while

- Performance work over the Summer

- HDF5 1.13.2: Selection I/O, VFD SWMR, Onion VFD (late July/early August)

- HDF5 1.13.3: Multi-dataset I/O, Subfiling (late September/early October)

- HDF5 1.14.0 in late December/early January

- We are hiring

- Director of Software Engineering

- Chief HDF5 Software Architect

- Interested in working for The HDF Group? Send us your resume!

- Speed up cloud access using multiprocessing! by John Readey (Mr. HSDS)

Each file is slightly more than 1 TB in size, so downloading the entire collection would take around a month with a 80 Mbit/s connection. Instead, let’s suppose we need to retrieve the data for just one location index, but for the entire time range 2007-2014. How long will this take?

- VFD SWMR beta 2 release

- Forum

- How efficient is HDF5 for data retrieval as opposed to data storage?

I would like to a keyed 500GB table into HDF5, and then then retrieve rows matching specific keys.

For an HDF5 file, items like all the data access uses an integer “row” number, so seems like I would have to implement a 'key to row number map" outside of HDF5.

Isn’t retrieval more efficient with a distributed system like Spark which uses HDFS?

- Too open-ended question w/o additional context

- There are no querying or indexing capabilities built into HDF5

- Can something like this be implemented on top of HDF5? It's been done many times, for specific requirements.

- Are other tools more efficient? Maybe. Maybe not.

- HDF5 1.10, 1.12 dramatic drop of performance vs 1.8

Profiling (gperftools) revealed

85% of the time is spent in

H5Fget_obj_count.- The user correctly inferred that this is related to frequent

h5open_f/h5close_f, and an issue we covered in the past- Bug in

h5f_get_obj_count_f& fixed in PR#1657 by Scot.

- Bug in

- After applying that fix performance is on par w/ 1.8

Happy ending! (almost)

h5stat output: ... Summary of file space information: File metadata: 1201344 bytes Raw data: 99260 bytes Amount/Percent of tracked free space: 0 bytes/0.0% Unaccounted space: 17392 bytes Total space: 1317996 bytes

- Greater than 1:10 data to metadata ratio

- Usually means trouble

- Culprit: chunk size of 1

- Greater than 1:10 data to metadata ratio

- Issue w/ memory backed files

- Still puzzled

- How efficient is HDF5 for data retrieval as opposed to data storage?

Tips, tricks, & insights

- SWMR (old) and compression "issues" - take 2

- Free-space management is disabled in the original SWMR implementation

- Can lead to file bloat when compression is enabled & overly aggressive flushing

- Question: How does the new SWMR VFD implementation behave?

- Untested, but should work in principle

- There is an updated VFD SWMR RFC

Clinic 2022-06-21

Your questions

- Q

- ???

Last week's highlights

- Announcements

- VFD SWMR beta 2 release

- Testers wanted

- HDFView 3.1.4 released

- Some confusion around versioning

- HDFView 3.2.x series is based on the HDF5 1.12.x releases.

- HDFView 3.1.x series is based on the HDF5 1.10.x releases.

- HDFView 3.3.x series will be based on the future 1.14.x releases.

- Known issue in 3.2.0: HDFView crashes on an attribute of VLEN of REFERENCE

- Some confusion around versioning

- VFD SWMR beta 2 release

- Forum

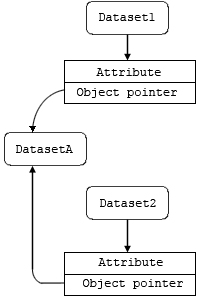

- Check if two identifiers refer to the same object

H5Imodule- Identifiers are transient

- Pre-defined and user-defined identifier types

- Pre-defined IDs identify HDF5 objects but also VFL and VOL plugins, etc.

- See

H5I_type_t

- See

- User-defined ID types must be first registered w/ the library before use

Certain functions are only available for user-defined IDs

[1] H5Iobject_verify: Object atom/Unable to find ID group information cannot call public function on library type

- Assuming pre-defined HDF5 IDs for objects, use

H5Oget_infoto retrieve a structure that contains an address or token which can then be compared

- Issue w/ memory backed files

- Workflow:

- Create a memory-backed file (w/ core VFD)

- Make changes

- Get a file image (in memory) and share w/ another process (shared mem. or network)

- Create another memory-backed file from this file image

- Should work just fine w/

H5P[g,s]et_file_image

- Workflow:

- Multithread Writing to two files

- Some users don't know how to help themselves.

- Check if two identifiers refer to the same object

Tips, tricks, & insights

- SWMR (old) and compression "issues"

- Reports:

- Elena answered in both cases

- It's not a bug but a "feature"

- File space recycling is disabled in SWMR mode

- Every time a dataset chunk is flushed, new space is allocated in the file

- How to alleviate?

- Keep the chunk in cache & flush only once it's baked

- Don't compress edge chunks

- Bigger picture: We need a feature (in-)compatibility matrix! Long overdue!

- Question: How does the new SWMR implementation behave?

Clinic 2022-06-14

Your questions

- Q

- ???

Last week's highlights

- Announcements

- Hermes 0.7.0-beta release

- Bug fixes

- Google OR tools replaced by GLPK

- VFD SWMR beta 2 release

- Testers wanted

- HUG 2022 Europe videos available

- Agenda and YouTube playlist

- Thanks to Lori Cooper

- Hermes 0.7.0-beta release

- Forum

- Multithread Writing to two files

Reading Image data from single file and writing to two h5 files using threads. Getting run time error doing this. What could be the reason ?

- It turns out the user is using high-level library (

hdf5_hl.h) functions, e.g.,H5IMmake_image_24bit - These functions are not thread-safe, even if the underlying HDF5 library was built w/ thread-safety enabled

- Workaround: Use the thread-safe core library and follow the specification, such as HDF5 Image and Palette Specification Version 1.2

- It turns out the user is using high-level library (

H5Rdestroywhen stored

If a reference is stored as an attribute to an object, should

H5Rdestroystill be called?- Yes

- Fake it till you make it: GANs with HDF5

- Never the same HDF5 dataset (value) twice!

- A clever combination of HDF5-UDF and generative adversarial networks (GAN)

- Competition between two neural networks a (synthetic-) data generator and a discriminator

- TensorFlow Keras API supports storing trained model weights in HDF5 files

- We can collocate the HDF5-UDF to synthesize data with the generator model

- Reading from this dataset creates a new sample every time

- Multithread Writing to two files

Tips, tricks, & insights

- HDF5 command-line tools and HDF5 path names w/ special characters

- Example:

/equilibrium/time_slice[]&profiles_2d[]&theta_SHAPE - We have a problem!

h5ls

First try

gerd@penguin:~$ h5ls equilibrium.h5/equilibrium/time_slice[]&profiles_2d[]&theta_SHAPE [2] 8959 [3] 8960 -bash: profiles_2d[]: command not found -bash: theta_SHAPE: command not found [3]+ Exit 127 profiles_2d[] gerd@penguin:~$ time_slice[]**NOT FOUND** ^C [2]+ Exit 1 h5ls equilibrium.h5/equilibrium/time_slice[] gerd@penguin:~$

Double quotes to the rescue

gerd@penguin:~$ h5ls "equilibrium.h5/equilibrium/time_slice[]&profiles_2d[]&theta_SHAPE" time_slice[]&profiles_2d[]&theta_SHAPE Dataset {107/Inf, 3/Inf, 2/Inf}

h5dump

First attempt

gerd@penguin:~$ h5dump -pH -d "/equilibrium/vacuum_toroidal_field&b0" equilibrium.h5 HDF5 "equilibrium.h5" { DATASET "/equilibrium/vacuum_toroidal_field&b0" { DATATYPE H5T_IEEE_F64LE DATASPACE SIMPLE { ( 107 ) / ( H5S_UNLIMITED ) } STORAGE_LAYOUT { CHUNKED ( 107 ) SIZE 656 (1.305:1 COMPRESSION) } FILTERS { COMPRESSION DEFLATE { LEVEL 1 } } FILLVALUE { FILL_TIME H5D_FILL_TIME_IFSET VALUE -9e+40 } ALLOCATION_TIME { H5D_ALLOC_TIME_INCR } } }Second attempt

gerd@penguin:~$ ~/packages/bin/h5dump -pH -d "/equilibrium/time_slice[]&profiles_2d[]&z_SHAPE" equilibrium.h5 HDF5 "equilibrium.h5" {h5dump error: unable to get link info from "/equilibrium/time_slice[]&profiles_2d"}

Not good. Need to investigate.

- Example:

Clinic 2022-06-07

Your questions

- Q

- ???

Last week's highlights

- Announcements

- Release of HDF5-1.10.9

- Parallel compression improvements

- Doxygen-based User Guide draft

- HDF5 is now tested and supported on macOS 11.6 M1

- VS 2015 will no longer be supported starting in 2023

- VFD SWMR beta 2 release

- Testers wanted

- 2022 European HDF5 Users Group

- Was a HUGE success

- Thanks to Lana Abadie of ITER, Andy Gotz of ESRF, and Lori Cooper of HDFG

- Stay tuned for the presentation videos!

- They will be posted on the YouTube channel

- Release of HDF5-1.10.9

- Forum

- Make HDFView available on Flathub.org

There's been some progress

Mostly working except filesystem portal integration, which makes the entire thing pretty useless for now, the code may need patching…

- Not sure what that means

- HDF5 write perf - Regular API vs Direct Chunk on uncompressed dataset

- Samuel posted

pprofprofiles- Need time to digest

- Samuel posted

- Make HDFView available on Flathub.org

Tips, tricks, & insights

- I can't create a compound datatype with XXX (too many) fields

- Cause: size limitation of the datatype message

- Possible solutions:

- Break up the compound type into multiple compound types

- Use a group and make each field a dataset

- Use an opaque type and store metadata to parse

- Use multiple (extendible) 2D datasets

- One dataset for each field datatype

- Keep the field metadata (names, order) in attributes

Clinic 2022-05-24

Your questions

- Q

- Element order preservation in point selections?

- Q

- ???

Last week's highlights

- Announcements

- 2022 European HDF5 Users Group (HUGE)

- HDF5 1.10.9-2-rc-1 source available for testing

- Parallel Compression improvements

- First steps toward a Doxygen-based “User Guide”

- HDF5 is now tested and supported on macOS 11.6 M1

- 2022 European HDF5 Users Group (HUGE)

- Forum

- Survey on the usage of self-describing data formats

- A minor step towards thread concurrency

- Idea

- Drop the global lock (temporarily) whenever we are performing file I/O

- Interesting idea, but devil's in the details (memory/file-hybrid state)

- Make HDFView available on

flathub.org

- Again, very interesting idea

- How can we help?

- VSCode extension for HDF5

- Someone started it already. Duh!

- How "complete" is it? (What are the requirements?)

- How can it be improved?

- We'll discuss this at HUG Europe 2022

- HDF5 write perf - Regular API vs Direct Chunk on uncompressed dataset

Some good news: no performance regression from HDF5 1.8.22

- direct chunk hyperslab chunk hyperslab contiguous regular 19.060877 38.609770 23.500788 never fill 19.578496 19.223011 24.316146 latest fmt 19.697542 35.719180 24.503973 never fill + latest format 19.084984 18.970671 24.817945 HDF5 1.12.1

- direct chunk hyperslab chunk hyperslab contiguous regular 21.050749 35.889814 23.982538 never fill 18.917586 20.929941 23.291541 latest fmt 19.921759 35.472971 23.935622 never fill + latest format 19.646342 19.800098 24.406450 - Question remains: Why is

hyperslab contiguousconsistently 25% slower than the chunky versions?- Need to dig deeper

- Read the code or … (both)

- gperftools (

pprof) output visualized w/[q,k]cachegrind

- Need to dig deeper

- Survey on the usage of self-describing data formats

Tips, tricks, & insights

- Awkward arrays

- Project home

- Awkward Array: Manipulating JSON-like Data with NumPy-like Idioms

- SciPy 2020 presentation by Jim Pivarski

- Watch this!

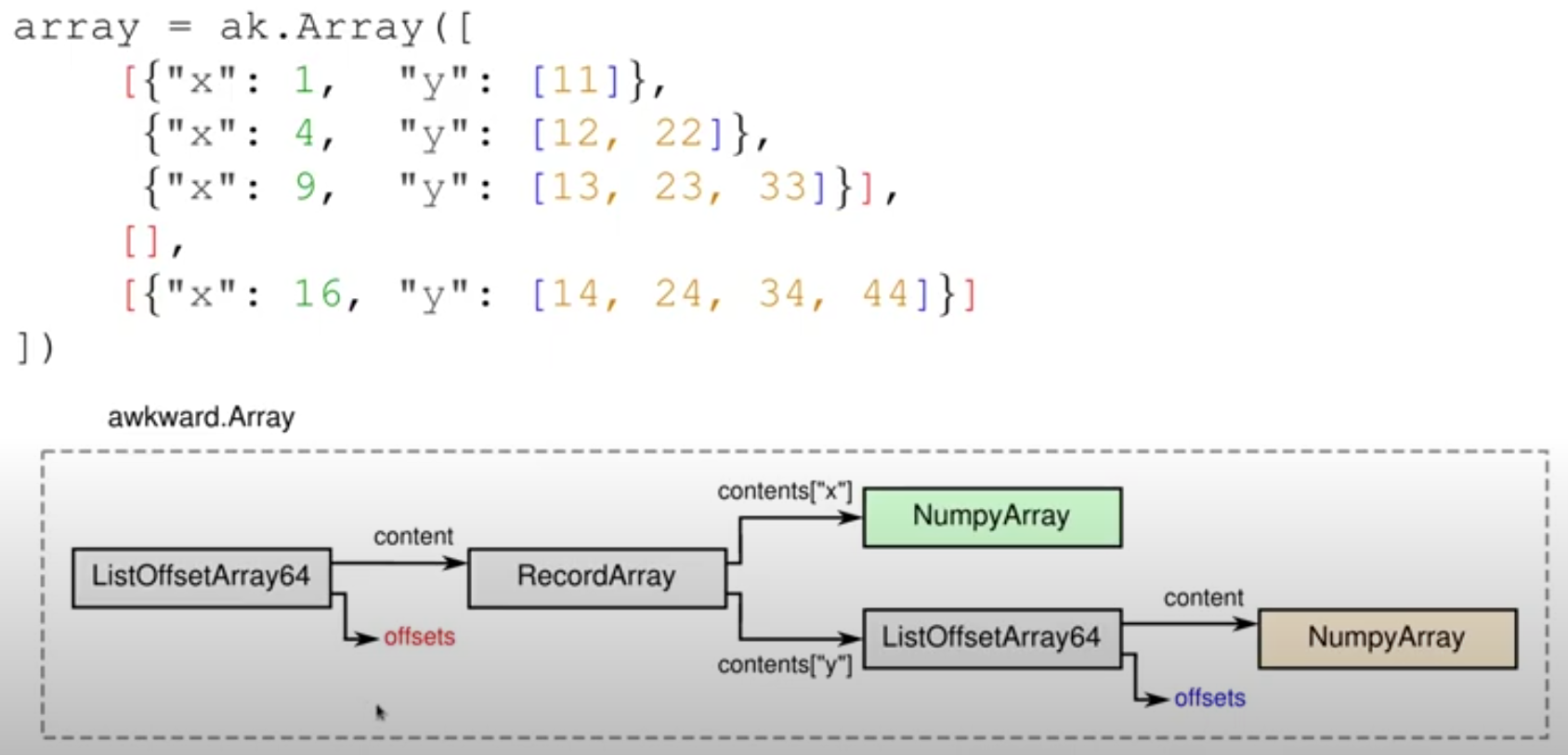

How would you represent something like this in HDF5? (Example from Jim's video)

import awkward as ak array = ak.Array([ [{"x": 1, "y": [11]}, {"x": 4, "y": [12, 22]}, {"x": 9, "y": [13, 23, 33]}], [], [{"x": 16, "y": [14, 24, 34, 44]}] ])

- Compound? Meh! Empty or partial records? Variable-length sequences…

=:-O - Columnar layout

- Pick an iteration order

- Put record fields into contiguous buffers

Keep track list offsets

outer offsets: 0, 3, 3, 5 content for x: 1, 4, 9, 16 offsets for y: 0, 1, 3, 6, 10 content for y: 11, 12, 22, 13, 23, 33, 14, 24, 34, 44

- A picture is worth a thousand words (screenshot from Jim's presentation)

- See the HDF5 structure?

- You can store both iteration orders, if that's what you need

- See the HDF5 structure?

- Compound? Meh! Empty or partial records? Variable-length sequences…

- Food for thought: Sometimes HDF5 is about finding balance between appearance and performance

- We will have a few HEP (High-Energy Physics)-themed presentations at HUG

Europe 2022

- You have been warned

;-)

- You have been warned

Clinic 2022-05-17

Your questions

- Q

- Element order preservation in point selections?

- Q

- ???

Last week's highlights

- Announcements

- 2022 European HDF5 Users Group (HUGE)

- Release of HDFView 3.2.0

- SWMR support (configurable refresh timer)

- Display and edit attribute data in table views, the same way as datasets

- Read and display non-standard floating-point numbers

- HDF5 VOL Status Report – Exascale Computing Project

- Includes early DAOS VOL connector performance numbers

- HSDS Data Streaming Arrives

- 100 MB (configurable) cap on HTTP request size

- Otherwise

413 - Payload Too Largeerror - Increasing the

max_request_sizecan help only if HSDS Docker container or Kubernetes pod has sufficient RAM - Now what? Streaming to the rescue!

- Let clients see bytes returning from the server while that is still processing the tail chunks in the selection

- Otherwise

- 100 MB (configurable) cap on HTTP request size

- 2022 European HDF5 Users Group (HUGE)

- Forum

- HDF5 write perf - Regular API vs Direct Chunk on uncompressed dataset

- We provided a little test program to eliminate as many layers as possible

The user ran the tests and obtained these results (seconds via

clock_gettime(CLOCK_PROCESS_CPUTIME_ID,.)):- direct chunk hyperslab chunk hyperslab contiguous regular 1.631005 3.368258 2.069583 never fill 1.632858 1.641345 2.069205 latest fmt 1.643421 3.233817 2.068250 never fill + latest format 1.633859 1.611976 2.029573 - Why is

hyperslab contiguousconsistently 25% slower than the chunky versions?- Perhaps some extra buffer copying going on here??? TBD

- Failures with insufficient disk space

- The HDF5 library state appears to be inconsistent after a disk-full error

(

ENOSPC)- What's the state of a file in that situation? (Undefined)

- Recovering to the last sane state is harder than it may seem, if not impossible

- But things appear to be worse: The library crashes (assertion failure) on

shutdown

- Open handle accounting is screwed up

- What's the state of a file in that situation? (Undefined)

- See the reproducer in last week's notes!

- It appears that the problem might be Windows-specific.

- @Dana tried to reproduce the problem under Linux and couldn't

- Nobody has come forward so far with a non-Windows error report

- The HDF5 library state appears to be inconsistent after a disk-full error

(

- Corrupted file due to shutdown

Thank you for all your help and all of the very useful pointers. I managed to recover the data in the file. I did not get all the names of the datasets but with the mentioned attribute i was able to reconstruct the data.

Thank you and all the best, Christian

- Great!

- A lost business opportunity

;-), but also a sign that skill, determination, and documentation go a long way - Our way always was and will be: Open-Source Software and Specifications

- HDF5 write perf - Regular API vs Direct Chunk on uncompressed dataset

Tips, tricks, & insights

Next time…

Clinic 2022-05-10

Your questions

- Q

- ???

Last week's highlights

- Announcements

- 2022 European HDF5 Users Group (HUGE)

- Hermes 0.6.0-beta release

- Highlight: Hermes HDF5 Virtual File Driver

- GitHub

- HDF5 1.12.2 is out

- Parallel compression improvements

- Support for macOS Apple M1 11.6 Darwin 20.6.0 arm64 with Apple clang version 12.0.5.

- The shortened versions of the long options (ex:

--datasinstead of--dataset) have been removed from all the tools.

- Sharing Experience on HDF5 VOL Connectors Development and Maintenance

- Wednesday, May 11, 1:00-2:00 PM ET

- Part of the ECP 2022 Community BOF days

- Open registration

- 2022 European HDF5 Users Group (HUGE)

- Forum

- Select mulptiple hyperslabs in some order

- (Hyperslab) Selections can be combined via set-theoretic operations (union, intersection, …)

- There's an implicit (C or Fortran) ordering of the selected grid points, i.e., (within commutativity rules of set theory) the order of those operations doesn't matter

- What about point selections?

hdf5vshffile

- Naming conventions (extensions) for HDF5 files

- Technically, it doesn't matter

- Tools, such as HDFView, use search filters, e.g.,

*.h5and*.hdf5

- HDF5 write perf - Regular API vs Direct Chunk on uncompressed dataset

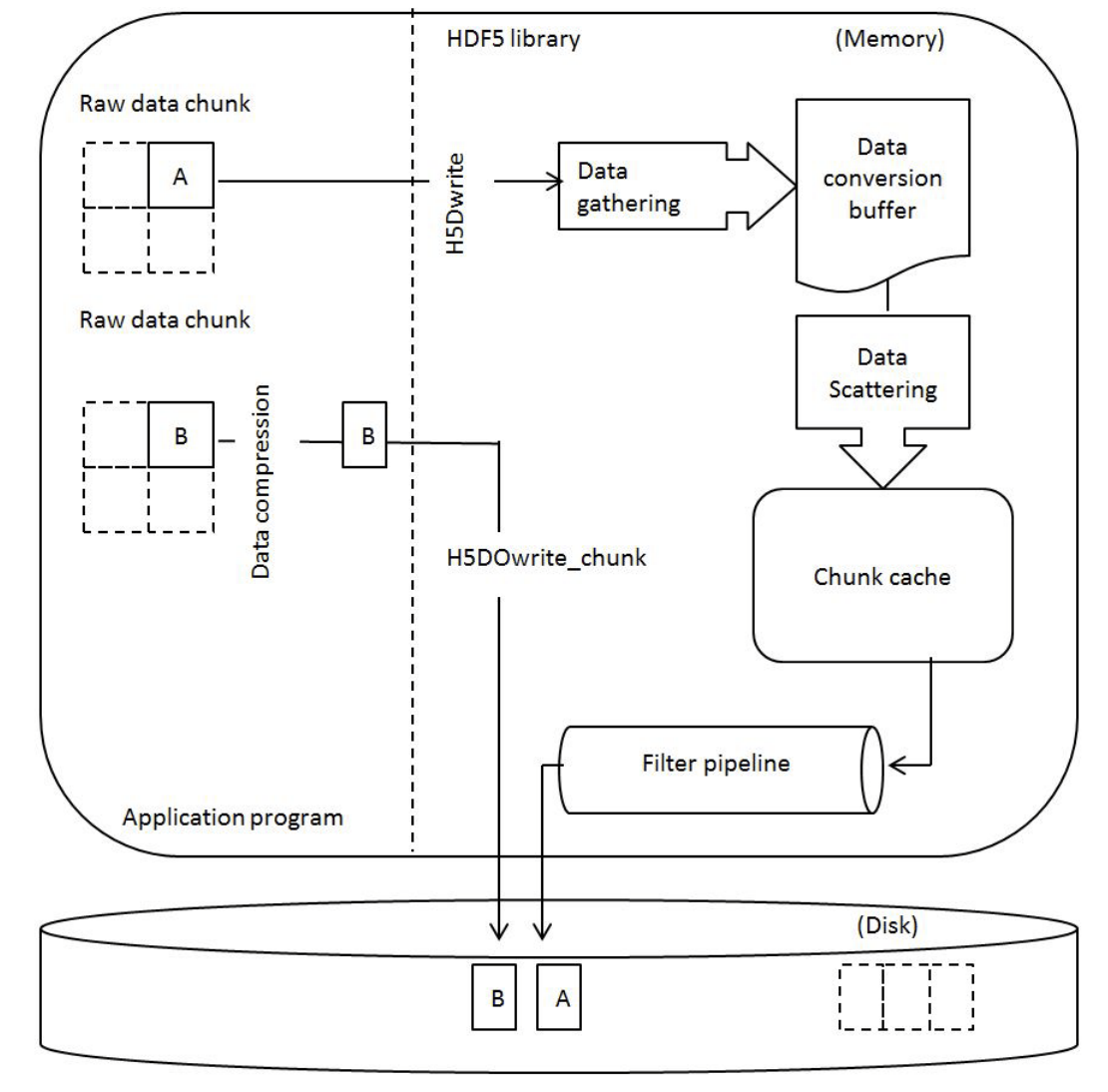

h5pyhaswrite_directandwrite_direct_chunk- About a 50% difference in performance. Why?

- What could slow down

H5Dwrite(vsH5Dwrite_chunk) when having no data conversion, no data scattering (contiguous layout), no chunking, no filters?

- Failures with insufficient disk space

- The HDF5 library state appears to be inconsistent after a disk-full error

(

ENOSPC)- What's the state of a file in that situation? (Undefined)

- Recovering to the last sane state is harder than it may seem, if not impossible

- But things appear to be worse: The library crashes (assertion failure) on

shutdown

- Open handle accounting is screwed up

- What's the state of a file in that situation? (Undefined)

Reproducer

#include "hdf5.h" #include <stdint.h> #include <stdlib.h> #define SIZE (1024 * 1024 * 128) int work(const char* path) { int retval = EXIT_SUCCESS; uint8_t* data = (uint8_t*) malloc(sizeof(uint8_t)*SIZE); for (size_t i = 0; i < SIZE; ++i) { *(data+i) = i % 256; } hid_t fapl = H5I_INVALID_HID; if ((fapl = H5Pcreate(H5P_FILE_ACCESS)) == H5I_INVALID_HID) { retval = EXIT_FAILURE; goto fail_fapl; } if (H5Pset_fclose_degree(fapl, H5F_CLOSE_STRONG) < 0) { retval = EXIT_FAILURE; goto fail_file; } hid_t file = H5I_INVALID_HID; if ((file = H5Fcreate(path, H5F_ACC_TRUNC, H5P_DEFAULT, fapl)) == H5I_INVALID_HID) { retval = EXIT_FAILURE; goto fail_file; } hid_t group = H5I_INVALID_HID; if ((group = H5Gcreate(file, "H5::Group", H5P_DEFAULT, H5P_DEFAULT, H5P_DEFAULT)) == H5I_INVALID_HID) { retval = EXIT_FAILURE; goto fail_group; } hid_t fspace = H5I_INVALID_HID; if ((fspace = H5Screate_simple(1, (hsize_t[]) {(hsize_t) SIZE}, NULL)) == H5I_INVALID_HID) { retval = EXIT_FAILURE; goto fail_fspace; } hid_t dset = H5I_INVALID_HID; if ((dset = H5Dcreate(group, "H5::Dataset", H5T_NATIVE_UINT8, fspace, H5P_DEFAULT, H5P_DEFAULT, H5P_DEFAULT)) == H5I_INVALID_HID) { retval = EXIT_FAILURE; goto fail_dset; } if (H5Dwrite(dset, H5T_NATIVE_UINT8, fspace, fspace, H5P_DEFAULT, data) < 0) { retval = EXIT_FAILURE; goto fail_write; } printf("Write succeeded.\n"); if (H5Fflush(file, H5F_SCOPE_GLOBAL) < 0) { retval = EXIT_FAILURE; goto fail_flush; } printf("Flush succeeded.\n"); fail_flush: fail_write: if (H5Dclose(dset) < 0) { printf("H5Dclose failed.\n"); } fail_dset: if (H5Sclose(fspace) < 0) { printf("H5Sclose failed.\n"); } fail_fspace: if (H5Gclose(group) < 0) { printf("H5Gclose failed.\n"); } fail_group: if (H5Fclose(file) < 0) { printf("H5Fclose failed.\n"); } fail_file: if (H5Pclose(fapl) < 0) { printf("H5Pclose failed.\n"); } fail_fapl: return retval; } int main() { int retval = EXIT_SUCCESS; retval &= work("O:/foo.h5"); // limited available space to force failure retval &= work("D:/foo.h5"); // lots of free space return retval; }

- The HDF5 library state appears to be inconsistent after a disk-full error

(

- External datasets relative to current directory or HDF5 file as documented?

- Documentation error: Thanks to the user who reported it.

- Select mulptiple hyperslabs in some order

Tips, tricks, & insights

Next time…

Clinic 2022-04-26

Your questions

- Q

- ???

Last week's highlights

- Announcements

- Forum

- Bug? H5TCopy of empty enum

- Is an empty enumeration datatype legit?

- I couldn't find that ticket @Elena mentioned a while ago

- Where is it?

- Bug? H5TCopy of empty enum

Tips, tricks, & insights

- Documentation - variable-length datatype description seems incomplete (doesn't mention global heap)

Interesting GitHub issue

I'm working on trying to decode an HDF5 file (attached) manually using the specification (for the purpose of writing rust code for a decoder that quickly extracts raw signal data from nanopore FAST5 files)…

- Tools of the trade

h5dump,h5debug,h5check - Highlights gaps/inaccuracies/inconveniences in the file format specification

- Bit-level representation of variable-length string datatypes

- What's a parent type? (spec. doesn't say)

- What's the parent type of a VLEN string? (character)

- (How) Is it encoded in the datatype message?

- Use of the global heap for VLEN data

- Not discussed where VLEN datatype message is discussed

- Bit-level representation of variable-length string datatypes

Sample

HDF5 "perfect_guppy_3.6.0_LAST_gci_vs_Nb_mtDNA.fast5" { GROUP "/" { ATTRIBUTE "file_version" { DATATYPE H5T_STRING { STRSIZE H5T_VARIABLE; STRPAD H5T_STR_NULLTERM; CSET H5T_CSET_UTF8; CTYPE H5T_C_S1; } DATASPACE SCALAR DATA { (0): "2.0" } } GROUP "read_44dcef85-283e-4782-b7b1-1c9a0f682597" { ATTRIBUTE "run_id" { DATATYPE H5T_STRING { STRSIZE H5T_VARIABLE; STRPAD H5T_STR_NULLTERM; CSET H5T_CSET_ASCII; CTYPE H5T_C_S1; } DATASPACE SCALAR DATA { (0): "cb45e5bda47a362d52bcfa146df9083b463bf65e" } } ...2.0\0is322e 3000in hex.- Let's find it!

Clinic 2022-04-19

Your questions

- Q

- ???

Last week's highlights

- Announcements

- 2022 European HDF5 Users Group (HUG)

- HDF5 1.12.2-3-rc-1 source available for testing

- Parallel compression improvements backported from HDF5 1.13.1

- HSDS v0.7beta13

- Support for Fancy Indexing

- Unlike with h5py though, HSDS works well with long lists of indexes by parallelizing access across the chunks in the selection.

- For example, retrieving 4000 random columns from a 17,520 by 2,018,392

dataset demonstrated good scaling as the number of HSDS nodes was increased:

- 4 nodes: 65.4s

- 8 nodes: 35.7s

- 16 nodes: 23.4 s

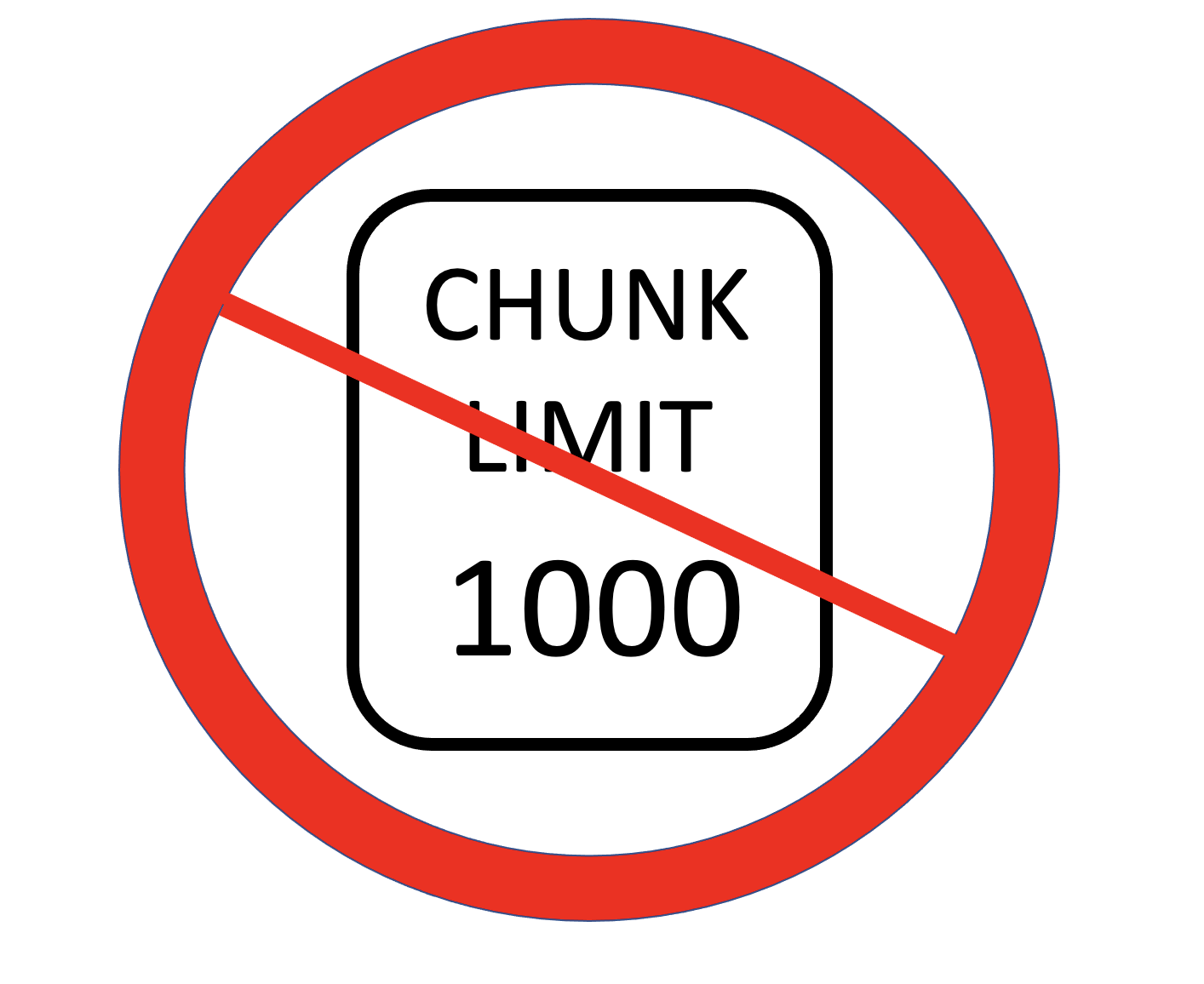

- The limit on the number of chunks accesses per request has been removed

- Support for Fancy Indexing

- 2022 European HDF5 Users Group (HUG)

- Forum

- Help Request regarding Java 3.3.2

- Switched from HDF Java 2.6.1 to 3.3.2 & seeing errors not seen before

- Artifact of checking for HDF5 Image convention attributes

- Prior versions ignored exceptions silently

8-( - Now we have "expected errors"???

h5f_get_obj_count_fExtremely Buggy?

- User is trying to use

h5fget_obj_count_fto establish the number of open handles; it appears that the result depends on the number of timesh5open_fwas called (???) - Calling

H5openw/ the C-API is usually not necessary and multiple calls have no side-effects h5open_f(Fortran API) appears to behave differently, buggy?- Fixed by Scot Breitenfeld in HDFFV-11306 Fixed #1657

- What a turnaround!

- User is trying to use

- C++ Read h5 cmpd (/struct) dataset that each field is vector

User's data

typedef struct { std::vector FieldA, FieldB, FieldC; } TestH5; TestH5.FieldA = {1.0,2.0,3.0,4.0}; // similar to FieldB, FieldC

- How to read this

TestH5using C++?

This brings us to today's …

- Help Request regarding Java 3.3.2

Tips, tricks, & insights

- How H5CPP makes you ask the right questions

(All quotations from Steven Varga's response!)

The expression below is a [templated] Class datatype in C++, placed in a non-contiguous memory location, requiring scatter-gather operators and a mechanism to dis-assemble reassemble the components. Because of the complexity AFAIK there is no automatic support for this sort of operation.

template <typename T> struct TestH5{ std::vector<T> FieldA, FieldB, FieldC; };

The structure above maybe modelled in HDF5 in the following way:

- (Columnar)

/group/[fieldA, fieldB, fieldC]fast indexing by columns, more complex and slower indexing by rows; also easier read/write from Julia/Python/R/C/ etc… - (Records) by a vector of tuples:

std::vector<std::tuple<T,T,T>>where you work with a single dataset, fast indexing by rows and slower indexing by columns - (Blocked) exotic custom solution based on direct chunk write/read: fast indexing of blocks by row and column wise at the increased complexity of the code.

- (Hybrid) …

H5CPP provides mechanism for the first two solutions:

TestH5<int> data = {std::vector<int>{1,2,3,4}, std::vector<int>{5,6,7}, std::vector<int>{8,9,10}}; h5::fd_t fd = h5::create("example.h5",H5F_ACC_TRUNC); h5::write(fd, "/some_path/fieldA", data.fieldA); h5::write(fd, "/some_path/fieldB", data.fieldB); h5::write(fd, "/some_path/fieldC", data.fieldC);

Ok the above is simple and well behaved, the second solution needs a POD struct backing, as tuples are not supported in the current H5CPP version (the upcoming will support arbitrary STL)

struct my_t { int fieldA; int fieldB; int fieldC; }

You can have any data types and arbitrary combination in the POD struct, as long as it qualifies as POD type in C++. This approach involves H5CPP LLVM based compiler assisted reflection – long word, I know; sorry about that. The bottom line you need the type descriptor and this compiler does it for you, without lifting a pinky.

std::vector<my_t> data; h5::fd_t fd = h5::create("example.h5",H5F_ACC_TRUNC); h5::write(fd, "some_path/some_name", data);

This approach is often used in event recorders, hence there is this

h5::appendoperator to help you out:h5::ds_t ds = h5::open(...); for(const auto& event: event_provider) h5::append(ds, event);

Both of the layouts are used to model sparse matrices, the second resembling COO or coordinate of points, whereas the first is for Compressed Sparse Row|Column format.

Slides are here, the examples are here.

best wishes: steve

Go on and read the rest of the thread! There is a lot of good information there (layouts, strings, array types, …).

- (Columnar)

Clinic 2022-04-12

Your questions

- Q

- ???

Last week's highlights

- Announcements

- Forum

- What kind of STL containers do you use in your field?

- Does anybody use the STL?

;-)

- Does anybody use the STL?

- Bug? H5TCopy of empty enum

- Looks like one

- Broken HDF5 file cannot be opened

- Don't make a fuzz!

;-) - Cornering the HDF5 library by feeding it random byte sequences as attribute and link names (or values)

- Mission accomplished!

- Don't make a fuzz!

- Automated formatted display of STL-like containers

g++ -I./include -o pprint-test.o -std=c++17 -DFMT_HEADER_ONLY -c pprint-test.cpp g++ pprint-test.o -lhdf5 -lz -ldl -lm -o pprint-test ./pprint-test LISTS/VECTORS/SETS: --------------------------------------------------------------------- array<string,7>:[xSs,wc,gu,Ssi,Sx,pzb,OY] vector:[CDi,PUs,zpf,Hm,teO,XG,bu,QZs] deque:[256,233,23,89,128,268,69,278,130] list:[95,284,24,124,49,40,200,108,281,251,57, ...] forward_list:[147,76,81,193,44] set:[V,f,szy,v] unordered_set:[2.59989,1.86124,2.93324,1.78615,2.43869,2.04857,1.69145] multiset:[3,5,12,21,23,28,30,30] unordered_multiset:[gZ,rb,Dt,Q,Ark,dW,Ez,wmE,GwF] ADAPTORS: --------------------------------------------------------------------- stack<T,vector<T>>:[172,252,181,11] stack<T,deque<T>>:[54,278,66,70,230,44,121,15,58,149,224, ...] stack<T,list<T>>:[251,82,278,86,66,40,278,45,211,225,271, ...] priority_queue:[zdbUzd,tTknDw,qorxgk,mCcEay,gDeJ,FYPOEd,CIhMU] queue<T,deque<T>>:[bVG,Bbs,vchuT,FfxEw,CXFrr,JAx,sVlcI] queue<T,list<T>>:[ARPl,dddmHT,mEiCJ,OVEYS,FIJi,jbQwb,tpJnpj,rlCRoKn,nBKjJ,KPlU,jatsUI, ...] ASSOCIATE CONTAINERS: --------------------------------------------------------------------- map<string,int>:[{LID:2},{U:2},{Xr:1},{e:2},{esU:1},{kbj:1},{qFc:3}] map<short,list<string>>:[{LjwUkey:5},{jZxhk:6},{sxKKVu:8},{vSmHmu:8},{wRBTdGS:7}] multimap<short,list<int>>:[{ALpPkqbJ:[6,6,8,7,8,5,7,8,5,5,6, ...]},{AwsHR:[8,5,6,6,5,6,7,6,7,6,8, ...]},{HtLQMvHv:[5,7,6,7,8,6,7]},{KbseLYEs:[5,8,6,8]},{RzsJm:[7,6,8,7,7,7,7,6,6,8,7, ...]},{XpNSkhDa:[7,5,8,8,7,8,5,5,5]},{cXPImNk:[6,8,6,5,8,7,5,6,6,8,6, ...]},{gkKHyh:[5,8,6,6,6,6,5,5,6]},{iPmaraP:[7,6,7,6,7,7,5,7,5,7,7, ...]},{pLmqL:[6,5,5,5,6]}] unordered_map<short,list<string>>:[{udXahPXD:7},{hUgYjak:5},{OpOmaBqA:7},{vTldeWdS:5},{jEHQST:8},{UZxId:7},{IslGsnGY:8}] unordered_multimap<short,list<int>>:[{JldxFw:[5,6,8,6,6]},{tnzhP:[8,6,8,5,5,8,8,8]},{cvMaS:[5,7,5,5,5,5]},{eGlyp:[8,7,8,8,7]}] RAGGED ARRAYS: --------------------------------------------------------------------- vector<vector<string>>:[[pkwZZ,lBqsR,cmKt,PDjaS,Zj],[Nr,jj,xe,uC,bixzV],[uBAU,pXCa,fZEH,FIAIO],[Vczda,HKEzO,ySqr,Fjd,nh,pgb,zcsw],[fLCgg,qQ,Reul,aTXp,DENn,ZDtkV,VXcB]] array<vector<short>,N>:[[29,49,29,42,25,33,49,33,44,49],[50,48,35,22,35,33,33],[46,27,23,20,48,38,45,28,45],[25,33,41,22,36]] array<array<short,M>,N>:[[90,35,99],[47,58,53],[82,25,72],[76,92,62],[39,88,32]] MISC: --------------------------------------------------------------------- pair<int,string>:{6:hJnCm} pair<string,vector<int>>:{iLclgkjnoY:[2,5,6,6,7,3,7,3,3,4,8, ...]} tuple<string,int,float,short>:<XTK,3,2.63601,6> tuple<string,list<int>,vector<float>,short>:<[TaPryDWKv,attpFqqIc,geHwbX,vdZ,kvruDeaxpZ,dSOqbVpr,jTciLPgBbI,duc,yUZiCP,zGrTsweTk,LNouX, ...],4,5.81555,[2,3,5,6,8]> - Group Overhead - File size problem for hierarchical data

We found that a group has quite a memory overhead (was it 2 or 20 kb?).

- How was that measured?

- What's in a group?

- Responses from Steven, John, and GH

- The figure quoted looks unusual/too high

H5Pset_est_link_inforegression in 1.13.1?

- Yup, looks like it!

- Compact vs. dense group storage

- The documentation has a few clues

- Corrupted file due to shutdown

I have a problem with a HDF5 file created with h5py. I have the suspicion that the process writing to the file was killed.

Try common household tools

strings -t d file.h5

- Then reach for the heavier guns (

h5check)

- What kind of STL containers do you use in your field?

Clinic 2022-04-05

Your questions

- Q

- ???

Last week's highlights

- Announcements

- Forum

- File remains open after calling

HD5F.close

- Context: .NET,

HDF5.PInvokemigrating from 1.8.x to 1.10.x - Biggest change: switch to 64-bit handles

- Symptoms: Change in unit test behavior, file closure

- User forgot to switch from

inttolongin one place C-style

typedefhas no equivalent in C#; hack:#if HDF5_VER1_10 using hid_t = System.Int64; #else using hid_t = System.Int32; #endif

- How to discover open handles?

H5Fget_obj_count,H5Fget_obj_idsH5Iiterate(since HDF5 1.12)

- Context: .NET,

- File remains open after calling

Tips, tricks, & insights

Clinic 2022-03-29

Your questions

- Q

- ???

Last week's highlights

- Announcements

- Free seminar: Using HDF5 and HSDS for Open Science Research at Scale

- Friday, April 1, 2022, 11:00 am - 12:30 pm EDT

- 2022 European HDF5 Users Group (HUG)

- Free seminar: Using HDF5 and HSDS for Open Science Research at Scale

- Forum

- Error: Read failed

- Got a sample, nothing unusual

- Core VFD works just fine

- Need to find a way to reproduce the behavior (I/O kernel)

- Mystery continues

- Symbolic links with relative path

- What's the meaning of 'relative' in HDF5? W.r.t. handle (file, group, …)!

..does and cannot mean what it does in a shell (no tree structure)- Special characters in link names? (

/,.,./,/./) ..,..., etc., and spaces are legitimate link names

- Refresh object so that external changes were applied

- The state of an open HDF5 file is a hybrid (in-memory, in-file)

- Simultaneously opening an HDF5 file in different processes with one or more

writers, without inter-process communication, creates a coherence problem!

(and potentially a consistency problem…)

- Don't go there!

- Use SWMR, IPC, or I/O request queue!

- Read Portion of Dataset

- That's what HDF5 is all about

- Great responses from a number of people

- Most comprehensive example by Steven (H5CPP)

- Error: Read failed

Tips, tricks, & insights

Clinic 2022-03-22

Your questions

- Q

- ???

Last week's highlights

- Announcements

- Free seminar: Using HDF5 and HSDS for Open Science Research at Scale

- Friday, April 1, 2022, 11:00 am - 12:30 pm EDT

- 2022 European HDF5 Users Group (HUG)

- Free seminar: Using HDF5 and HSDS for Open Science Research at Scale

- Forum

- Check if Hard/Soft/External link is broken

- How to detect broken soft or external links?

H5Lexistschecks only for link existence in a group, not validity of destination

- Not a perfect match, but

H5Oexists_by_namemight do the trick

- How to detect broken soft or external links?

- A problem when saving

NATIVE_LDOUBLEvariables

- Interesting discussion around

NATIVE_LDOUBLE - Compiler and processor dependent => you are opening a can of worms

- Two options:

- User-defined FP datatype + soft conversions (relatively safe)

- Opaque datatype + metadata (dangerous)

- Interesting discussion around

- Error: Read failed

- Mystery continues

- Trying to get a sample

- Use the core VFD to check if it's potentially a POSIX VFD/Windows issue